How To Debug Node JS Inside Docker?

Debug your way into docker containers

Photo by Ian Taylor on Unsplash

What is a Debugger?

For any developer, a debugger is one of the most useful tools. It makes bugs in software much easier to find.

One can add a breakpoint to pause execution. One can also attach a condition to a breakpoint so that execution halts only when the condition holds. As an example, consider a for loop with 1,000 iterations, where execution should stop once the iteration count goes above 100. To do so, put a breakpoint inside the for loop. Next, add a condition that halts execution when the iteration count exceeds 100.
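The same idea can also be written directly in code. The following is a minimal sketch, not a replacement for the conditional breakpoint feature of your editor: the function name runLoop and its parameters are made up for illustration. JavaScript's debugger statement pauses execution only while a debugger is attached and does nothing otherwise.

```javascript
// Hypothetical example: pause once, at the first iteration above the threshold.
function runLoop(totalIterations, threshold) {
  let firstPausedAt = null;
  for (let i = 1; i <= totalIterations; i++) {
    if (i > threshold && firstPausedAt === null) {
      firstPausedAt = i;
      debugger; // acts like a breakpoint when a debugger is attached, otherwise a no-op
    }
  }
  return firstPausedAt;
}

const pausedAt = runLoop(1000, 100);
console.log(pausedAt); // 101
```

A conditional breakpoint in the editor achieves the same effect without touching the source, which is usually preferable for code that will be committed.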

Besides halting a program, debuggers show the state of the program. For example, while execution is halted, one can inspect variable values, the call stack, and the memory consumed at that point.

What Is a Remote Debugger?

Debugging is usually done on localhost. Doing it remotely is called remote debugging :). That is, if you debug software running on a remote host, it’s called remote debugging. It is helpful for multiple reasons.

First, software deployed elsewhere can be debugged from the local machine. Consider a scenario where software is on the cloud. It might be deployed for dev, UAT, or production. Now an issue happens on the cloud but not on localhost. In this case, it would be very helpful to connect to the cloud and attach the debugger to the process. One can execute the software line by line to evaluate the issue and fix it.

Secondly, remote debugging is also useful when the software is running inside a container. Let’s say a project is running inside Docker. One cannot simply run the project and connect to it with the debugger. Instead, the docker container has to expose the debug port, and the remote debugger has to be configured to connect to the project inside the docker container.

Docker helps create portable containers that are fast and easy to deploy on various machines. These containers can be run locally on Windows, macOS, and Linux. Major cloud platforms like AWS and Azure also support them out of the box. If you want to learn more Docker basics and need a cheat sheet for Docker CLI, here is an introductory article about it.

In this article, we will set up a NodeJS project to run inside a docker container. We will also set up remote debugging for the project.

If you love this article so far, please follow me and do check out other such awesome articles on my profile.

Setting Up the Project

Prerequisites

Before we move further, the system should have Docker Desktop and VS Code installed. Node.js with npm is also needed on the host, since we will run npm install locally.

For the hasty ones, I have made the source code available as a repository. You can check it out here.

Creating Project Files

We are going to create a very simple express Node JS project. It will simply return a static JSON string on opening a specific URL. For this, we will create a file named server.js, which is the entry point to our project.

Create a server.js file with the following contents:

const server = require("express")();
server.listen(3000, async () => { });
server.get("/node-app", async (_, response) => {
    response.json({ "node": "app" });
});

The server.js file starts a server on port 3000 that responds with {"node": "app"} when the http://localhost:3000/node-app URL is opened in the browser.
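To see what the route handler does without starting the server, the handler can be called with a stand-in response object. This is only a sketch: nodeAppHandler and createFakeResponse are hypothetical names, and the fake object implements just the json method that the handler uses, not the real Express response.

```javascript
// The same logic as the "/node-app" route in server.js, as a named function.
function nodeAppHandler(_, response) {
  response.json({ "node": "app" });
}

// Hypothetical stand-in for the Express response: it only records the JSON payload.
function createFakeResponse() {
  return {
    body: undefined,
    json(payload) {
      this.body = payload;
    },
  };
}

const fakeResponse = createFakeResponse();
nodeAppHandler(null, fakeResponse);
console.log(JSON.stringify(fakeResponse.body)); // {"node":"app"}
```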

Secondly, we will need a package.json file to configure the project and add dependencies. For that, create a package.json file with the following content:

{
  "name": "node-app",
  "dependencies": {
    "express": "^4.17.1"
  }
}

Run the npm install command to install the dependencies locally. This will create a node_modules folder in the project directory.

Even though we will be running the project inside a container, the dependencies must also be installed locally. This is because we will map our current project directory onto the project directory in the container, so the container will see the local node_modules folder. How to set up this mapping is explained below.
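One way to confirm that the local install is complete before starting the container is to compare package.json with the contents of node_modules. The sketch below is a hypothetical helper, not part of the project: findMissingDependencies is an invented name, and the demo runs against a throwaway temporary directory with an empty express folder standing in for a real install.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Returns the names of dependencies listed in package.json
// that have no folder under node_modules.
function findMissingDependencies(projectDir) {
  const manifestPath = path.join(projectDir, "package.json");
  const manifest = JSON.parse(fs.readFileSync(manifestPath, "utf8"));
  const names = Object.keys(manifest.dependencies || {});
  return names.filter(
    (name) => !fs.existsSync(path.join(projectDir, "node_modules", name))
  );
}

// Demo in a temporary directory so that the real project is not touched.
const demoDir = fs.mkdtempSync(path.join(os.tmpdir(), "node-app-"));
fs.writeFileSync(
  path.join(demoDir, "package.json"),
  JSON.stringify({ name: "node-app", dependencies: { express: "^4.17.1" } })
);

const missingBeforeInstall = findMissingDependencies(demoDir);

// Simulate what npm install would create (an empty folder is enough for this check).
fs.mkdirSync(path.join(demoDir, "node_modules", "express"), { recursive: true });
const missingAfterInstall = findMissingDependencies(demoDir);

console.log(missingBeforeInstall, missingAfterInstall);

// Clean up the temporary directory.
fs.rmSync(demoDir, { recursive: true, force: true });
```

In the real project, calling findMissingDependencies(process.cwd()) from the project directory would perform the same check.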

Running as Docker Container

A Dockerfile is needed to run the project as a docker container. Create a Dockerfile with the following contents:

# Download the slim version of node
FROM node:17-slim
# Needed for monitoring any file changes
RUN npm install -g nodemon
# Set the working directory to the /node folder.
# We will be copying our code here
WORKDIR /node
# Copy all files from current directory to the container
COPY . .
# Needed for production. See the explanation below
RUN npm install

Here, the project is set up to run as a simple node server without allowing any breakpoints. The container will run the project out of the /node directory inside the container. nodemon is installed globally in the container. It is needed to watch for file changes in that directory and is explained in detail below.

The RUN npm install command matters only when deploying to production. For debugging, we will map the /node directory of our container to the current project directory on localhost using Docker Compose (next section), so the locally installed dependencies are used. When the app is deployed as a standalone container, there is no such mapping, so the image needs to install the dependencies on its own.

Docker Ignore

The Docker ignore feature is very similar to Git ignore. Git does not track the files or folders listed in .gitignore. Similarly, Docker does not copy the files or folders listed in .dockerignore into the container, which avoids wasting space on unnecessary files.

In our case, we don’t want to copy the node_modules folder to the container. To do so, create a .dockerignore file in the project directory with the following contents:

node_modules/

Docker Compose

Docker Compose is a really helpful way to build and run docker containers with a single command. It is also helpful for running multiple containers at the same time. It is one of the reasons we use docker compose instead of plain docker. To know more about docker compose and how to run multiple containers, please visit the article Run Multiple Containers With Docker Compose.

Now, let’s create a docker-compose.yml file to add some more configurations. Add the below contents to docker-compose.yml file once created:

version: '3.4'
services:
  node-app:
    # 1. build the current directory
    build: .
    # 2. Run the project using nodemon, for monitoring file changes
    # Run the debugger on 9229 port
    command: nodemon --inspect=0.0.0.0:9229 /node/server.js 3000
    volumes:
      # 3. Bind the current directory on local machine with /node inside the container.
      - .:/node
    ports:
      # 4. map the 3000 and 9229 ports of container and host
      - "3000:3000"
      - "9229:9229"

The docker-compose.yml file is explained point-wise below.

1. Point to our current directory for building the project.

2. Run the project using nodemon so that any change in the local directory restarts the project inside the container with the change applied. Nodemon is a utility that monitors your source for changes and automatically restarts your server.

3. Bind our current directory to the /node directory using volumes.

4. In addition to exposing and binding port 3000 for the server, expose port 9229 for attaching the debugger.

Use the above docker-compose.yml file only for debugging.

The above docker-compose.yml exposes the debug port. It also uses nodemon to watch for file changes inside the container, which will not happen in production. Lastly, it maps the project directory into the container as a volume.
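The --inspect flag in the command above starts Node's built-in inspector, which is what the debugger connects to. Inside a container it is bound to 0.0.0.0 because an inspector listening only on 127.0.0.1 would not be reachable through the published port. As a rough illustration, the same inspector can be opened from code with Node's inspector module. The sketch below assumes it runs directly on your machine rather than in the container, so it binds to 127.0.0.1 and asks for a free port (0) instead of 9229.

```javascript
const inspector = require("inspector");

// Open the inspector on the loopback interface; port 0 asks the OS for any free port.
// (In the container setup, the --inspect=0.0.0.0:9229 flag does this job instead.)
inspector.open(0, "127.0.0.1");

// The WebSocket URL that a debugger client would connect to.
const inspectorUrl = inspector.url();
console.log(inspectorUrl);

// Stop listening so that the process can exit.
inspector.close();
```

The printed URL starts with ws://, which shows that the debugger talks to the process over a WebSocket connection on the inspector port.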

For production, create a new file docker-compose-prod.yml with the following contents:

version: '3.4'
services:
  node-app:
    build: .
    command: node /node/server.js 3000
    ports:
      - "3000:3000"

It simply runs the project and exposes the 3000 port. We are using multiple docker compose files to manage separate environments. Check the Running a Project section below to understand how to run a project based on different docker compose files.

Before we can run the project, we still need to configure the debugger to connect to the container.

Configure a Remote Debugger

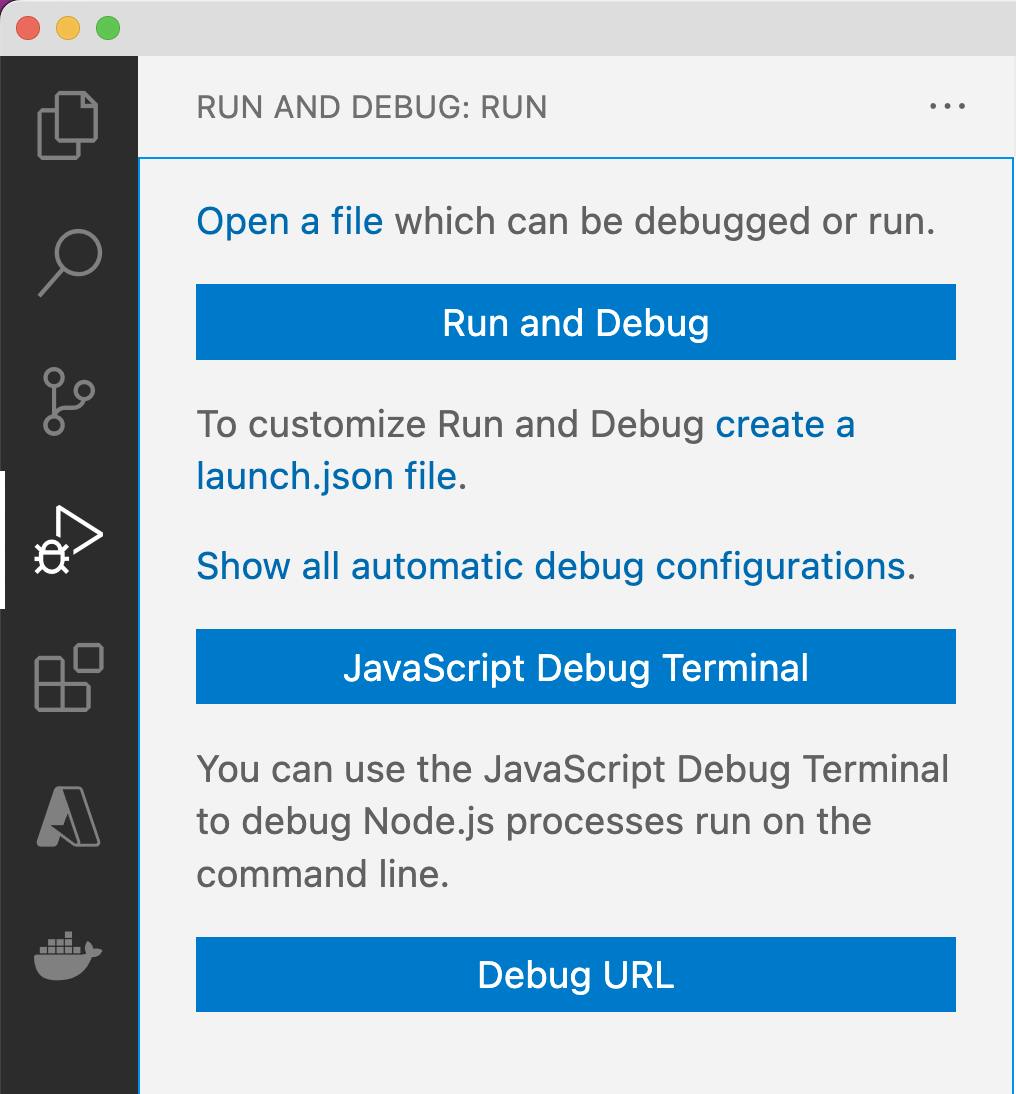

First, check if you have launch.json file created in your project. launch.json defines different types of configurations we can run for debugging. If it is not created, visit the RUN AND DEBUG tab on the left in your VS Code, as seen in the image below:

Click on the text that says create a launch.json file. Before proceeding, VS Code will ask for the type of application. Select Node.js. It will create a new launch.json file in your project with a default Node.js configuration added.

Since we are not going to run the node application locally, go ahead and delete that configuration. Instead, replace the launch.json file with the following content:

{
  "version": "0.2.0",
  "configurations": [
    {
      // 1. Type of application to attach to
      "type": "node",
      // 2. Type of request. In this case 'attach'
      "request": "attach",
      // 3. Restart the debugger whenever it gets disconnected
      "restart": true,
      // 4. Port to connect to
      "port": 9229,
      // 5. Name of the configuration
      "name": "Docker: Attach to Node",
      // 6. Connect to /node directory of docker
      "remoteRoot": "/node"
    }
  ]
}

The configuration is mostly self-explanatory. We are asking the debugger to attach to a Node process on port 9229 and to reconnect whenever it gets disconnected from the host. By default, the debugger connects to localhost, so it reaches the container through the published port at localhost:9229. Inside the container, however, the project lives in the /node directory. The remoteRoot attribute tells the debugger about that directory so that it can map files under /node to the files in your local project.
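The path mapping that remoteRoot makes possible can be pictured with a small function. This is an illustrative sketch only, not how VS Code implements it: toLocalPath is a hypothetical name, and /home/me/node-app is an assumed location of the project on the host.

```javascript
const path = require("path");

// Translate a file path inside the container into the matching path on the host.
// Returns null when the file does not live under remoteRoot.
function toLocalPath(remotePath, remoteRoot, localRoot) {
  const relativePath = path.posix.relative(remoteRoot, remotePath);
  if (relativePath === ".." || relativePath.startsWith("../")) {
    return null; // outside the mapped directory
  }
  return path.posix.join(localRoot, relativePath);
}

// "/home/me/node-app" is an assumed host directory, used only for this demo.
console.log(toLocalPath("/node/server.js", "/node", "/home/me/node-app"));
// /home/me/node-app/server.js
console.log(toLocalPath("/usr/lib/other.js", "/node", "/home/me/node-app"));
// null
```

With such a mapping, a breakpoint set in the local server.js can be matched to /node/server.js, the file that the container actually executes.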

Running the Project

That’s about it! Now, if you run docker compose up, your project will start running. On the first run, it will download the layers of the node slim image and then install nodemon inside the docker container. Subsequent runs will be much faster. Run the following command in your terminal:

docker compose up

To attach the debugger, run the Docker: Attach to Node configuration from the RUN AND DEBUG tab. The debugger will now attach to the Node process running in your docker container. Next, put a breakpoint on the response.json({ "node": "app" }); line of your server.js file. Finally, open your browser and go to http://localhost:3000/node-app. The breakpoint will be hit, and the execution will halt.

For production, we need to use the docker-compose-prod.yml file. To do so, we need to mention the file name in the docker command. Execute the following command to run the project as if in a production environment:

docker compose -f docker-compose-prod.yml up

With the above command, a debugger cannot be attached to the container since the inspector is not started and the debug port is not exposed.
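As an extra safeguard, the production entry point could check whether Node was started with an inspector flag. The sketch below is an example under stated assumptions rather than part of the project: hasInspectFlag is a hypothetical helper, and it only looks at the list of flags it is given (for a running process, process.execArgv), not at other ways of enabling the inspector such as the NODE_OPTIONS environment variable.

```javascript
// Returns true when the given Node flags include --inspect or --inspect-brk,
// with or without a host:port value.
function hasInspectFlag(nodeFlags) {
  return nodeFlags.some((flag) => /^--inspect(-brk)?(=.*)?$/.test(flag));
}

console.log(hasInspectFlag(["--inspect=0.0.0.0:9229"])); // true
console.log(hasInspectFlag([])); // false

// For the current process, pass the flags that Node itself was started with.
const currentProcessInspectable = hasInspectFlag(process.execArgv);
console.log(currentProcessInspectable);
```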

Source Code

Here is the link to the final source code of the project we have created.

Conclusion

Debugging is one of the most valuable parts of development, and being able to debug remotely is the cherry on top. Remote debugging lets us connect to code running not only on the cloud but also inside a docker container running locally.

I hope you have enjoyed this article.